Extra Configurations for Deploying Self-Hosted Sweep

This guide assumes you have already completed http://docs.sweep.dev/deployment/ and have a working Sweep instance.

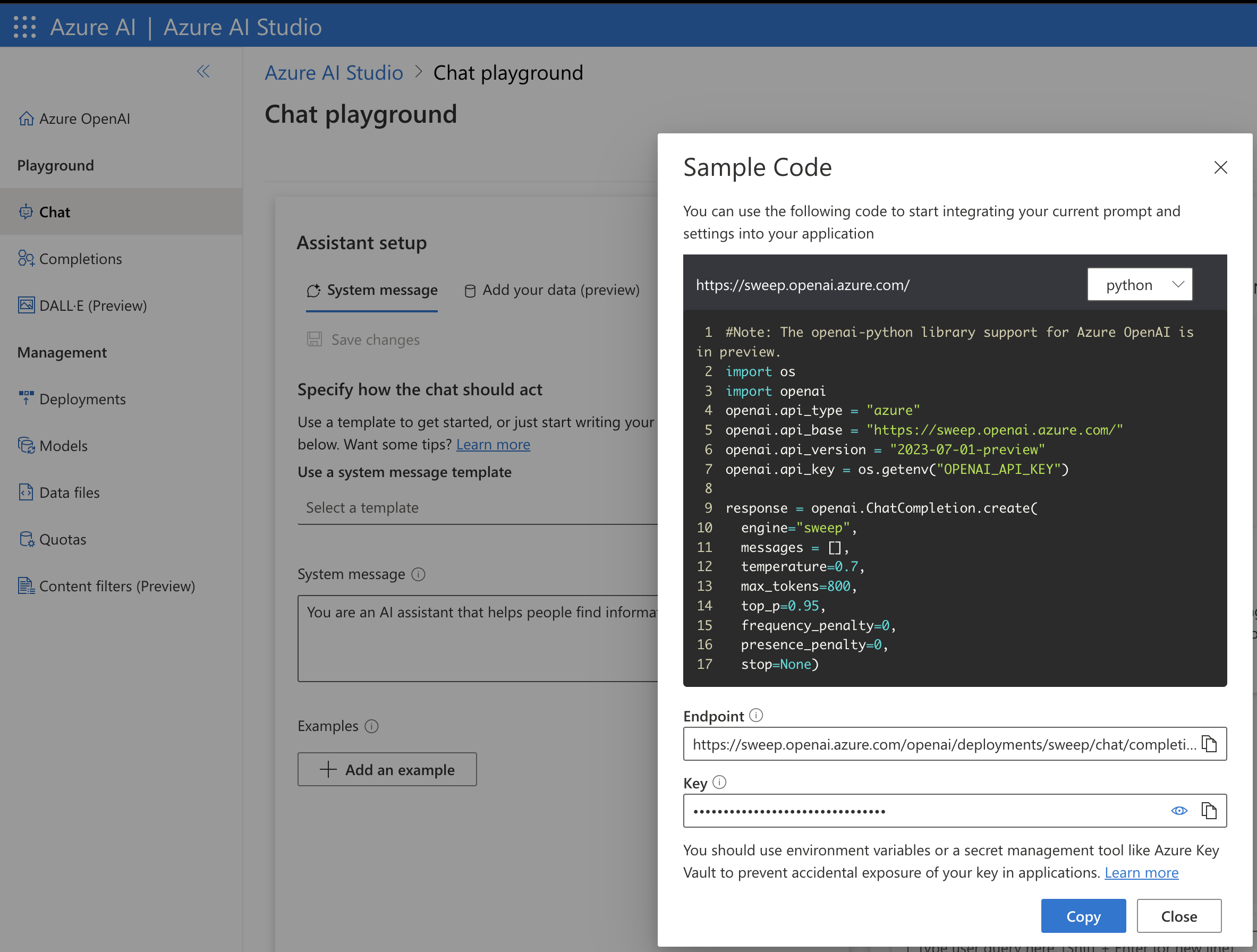

Using Azure for OpenAI

1. Go to your Azure deployment.

This can be found at https://oai.azure.com/portal -> Chat (left tab) -> View Code (middle of the page).

You'll see something like the below:

2. Go to your Sweep deployment.

Edit your .env file (for example, with vim .env) and fill in the following fields:

AZURE_API_KEY=<YOUR_KEY> # this can be found under "key" in the screenshot above

OPENAI_API_TYPE=azure

OPENAI_API_BASE=https://<YOUR_BASE_URL>.openai.azure.com/ # replace <YOUR_BASE_URL> with the url you see in the screenshot above

OPENAI_API_VERSION="2023-07-01-preview" # replace this with the latest if it's not 2023-07-01-preview

OPENAI_API_ENGINE_GPT35=YOUR_ENGINE_NAME # replace this for the models/engines you have access to

OPENAI_API_ENGINE_GPT4=YOUR_ENGINE_NAME # replace this for the models/engines you have access to

OPENAI_API_ENGINE_GPT4_32K=YOUR_ENGINE_NAME # replace this for the models/engines you have access to

3. Configure GPT3.5 or GPT4-32K

If you only have access to GPT3.5 on Azure (or on OpenAI), fill in the following field:

OPENAI_USE_3_5_MODEL_ONLY=true

If you happen to have access to GPT4-32K, fill in the following field:

OPENAI_DO_HAVE_32K_MODEL_ACCESS=true

4. Test other LLMs

Huggingface, Palm, Ollama, TogetherAI, AI21, Cohere, etc. See the full list of supported providers.
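The Azure fields from steps 2 and 3 are easy to leave half-edited, so a quick check before restarting Sweep may help. The sketch below is not part of Sweep: parse_env and check_azure_env are hypothetical helper names, the parser only handles simple KEY=value lines with optional trailing # comments, and treating AZURE_API_KEY, OPENAI_API_BASE, and OPENAI_API_VERSION as required is an assumption based on the fields listed in step 2.

```python
"""Sanity-check the Azure fields in a Sweep .env file (illustrative sketch)."""

# Assumption: these are the fields that must be filled in when using Azure.
REQUIRED_FOR_AZURE = ("AZURE_API_KEY", "OPENAI_API_BASE", "OPENAI_API_VERSION")


def parse_env(text):
    """Parse simple KEY=value lines, dropping comments and surrounding quotes."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Treat " #" as the start of a trailing comment (a simplification).
        value = value.split(" #", 1)[0].strip().strip("\"'")
        env[key.strip()] = value
    return env


def _is_placeholder(value):
    """True if the value is empty or still looks like an unedited template."""
    return not value or "<" in value or "YOUR_" in value


def check_azure_env(env):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if env.get("OPENAI_API_TYPE") != "azure":
        problems.append("OPENAI_API_TYPE is not set to 'azure'")
    for key in REQUIRED_FOR_AZURE:
        if _is_placeholder(env.get(key, "")):
            problems.append(f"{key} is missing or still a placeholder")
    engines = [
        key
        for key, value in env.items()
        if key.startswith("OPENAI_API_ENGINE_") and not _is_placeholder(value)
    ]
    if not engines:
        problems.append("no OPENAI_API_ENGINE_* field has a real engine name")
    return problems
```

For example, check_azure_env(parse_env(open(".env").read())) returns an empty list when none of these problems are found. It does not contact Azure, so a passing check only means the file looks filled in.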

Create OpenAI-proxy

We'll use LiteLLM to create an OpenAI-compatible endpoint that translates OpenAI calls to any of the supported providers.

Example: using a local CodeLlama model from Ollama.ai with Sweep.

Let's spin up a proxy server to route any OpenAI call from Sweep to Ollama/CodeLlama:

$ pip install litellm

$ litellm --model ollama/codellama

#INFO: Ollama running on http://0.0.0.0:8000

Update Sweep

Update your .env

OPENAI_API_BASE=http://0.0.0.0:8000

OPENAI_API_KEY=my-fake-key

Note: All of Sweep's testing has been done on GPT-4. In our testing, even GPT-3.5 and Claude v2 break on most of our prompts.
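To confirm that the proxy answers OpenAI-style requests before restarting Sweep, a request can be sent to it directly. This is a minimal sketch using only the Python standard library. build_chat_request and send are hypothetical helper names, and the /chat/completions route and the ollama/codellama model name in the payload are assumptions based on the proxy being OpenAI-compatible, so check them against the LiteLLM version you have installed.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, prompt, model="ollama/codellama"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumed to match the model the proxy was started with
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",  # assumed route
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the proxy from the example running, send(build_chat_request("http://0.0.0.0:8000", "my-fake-key", "Say hello")) should return a JSON object if these assumptions hold. A connection error here usually means the proxy is not running or is listening on a different address.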